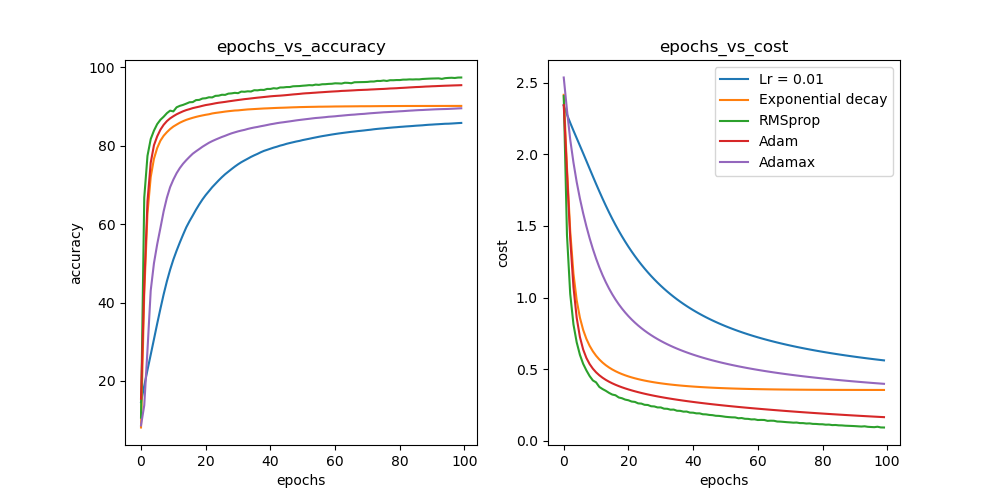

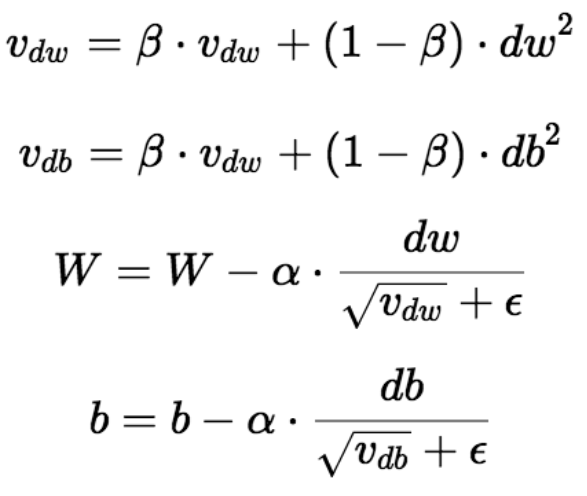

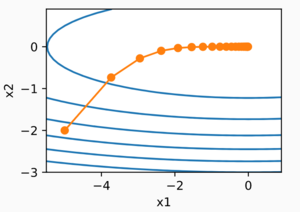

RMSprop optimizer provides the best reconstruction of the CVAE latent... | Download Scientific Diagram

![Convergence Guarantees for RMSProp and ADAM in Non-Convex Optimization and an Empirical Comparison to Nesterov Acceleration | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/f0a8159948d0b5d5035980c97b88038d444a1454/9-Figure3-1.png)

[PDF] Convergence Guarantees for RMSProp and ADAM in Non-Convex Optimization and an Empirical Comparison to Nesterov Acceleration | Semantic Scholar

Florin Gogianu @florin@sigmoid.social on Twitter: "So I've been spending these last 144 hours including most of new year's eve trying to reproduce the published Double-DQN results on RoadRunner. Part of the reason

GitHub - soundsinteresting/RMSprop: The official implementation of the paper "RMSprop can converge with proper hyper-parameter"

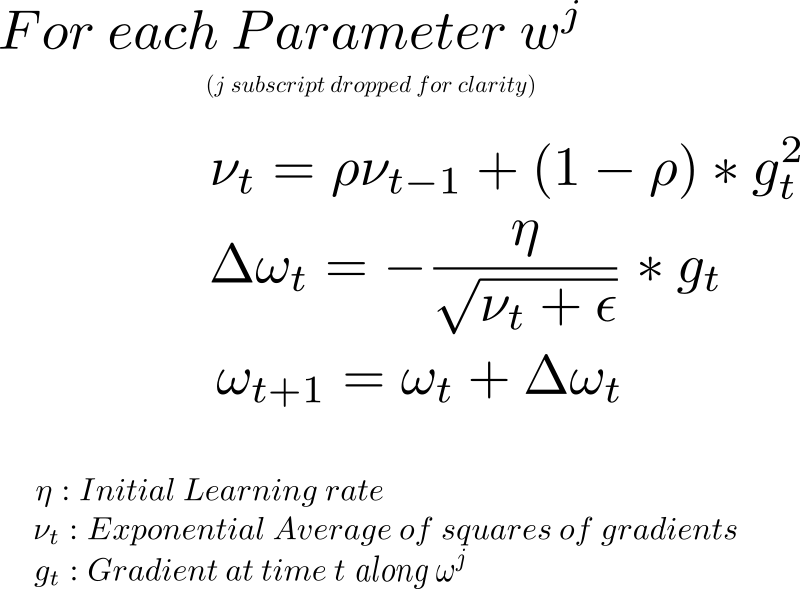

CONVERGENCE GUARANTEES FOR RMSPROP AND ADAM IN NON-CONVEX OPTIMIZATION AND AN EMPIRICAL COMPARISON TO NESTEROV ACCELERATION

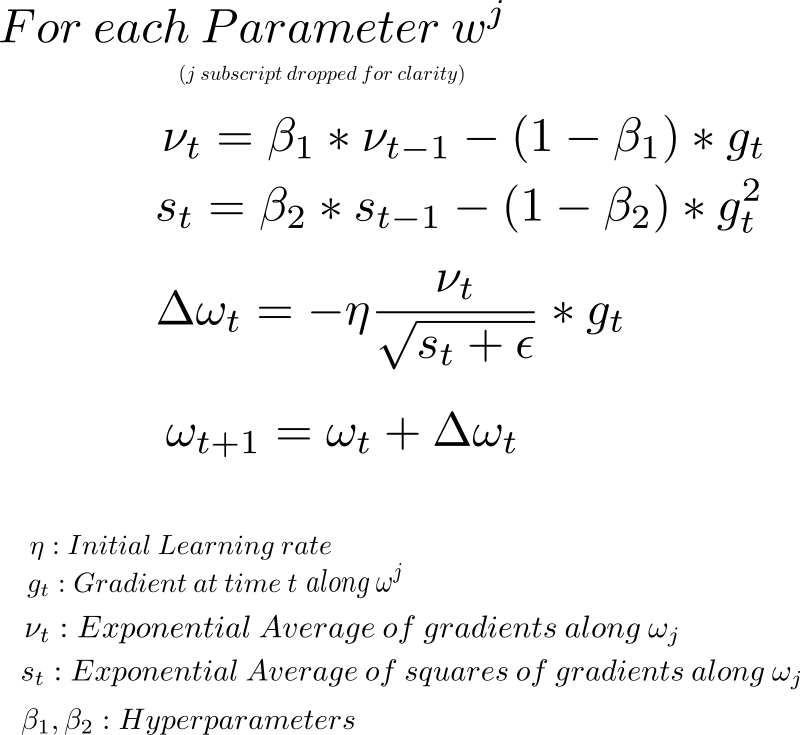

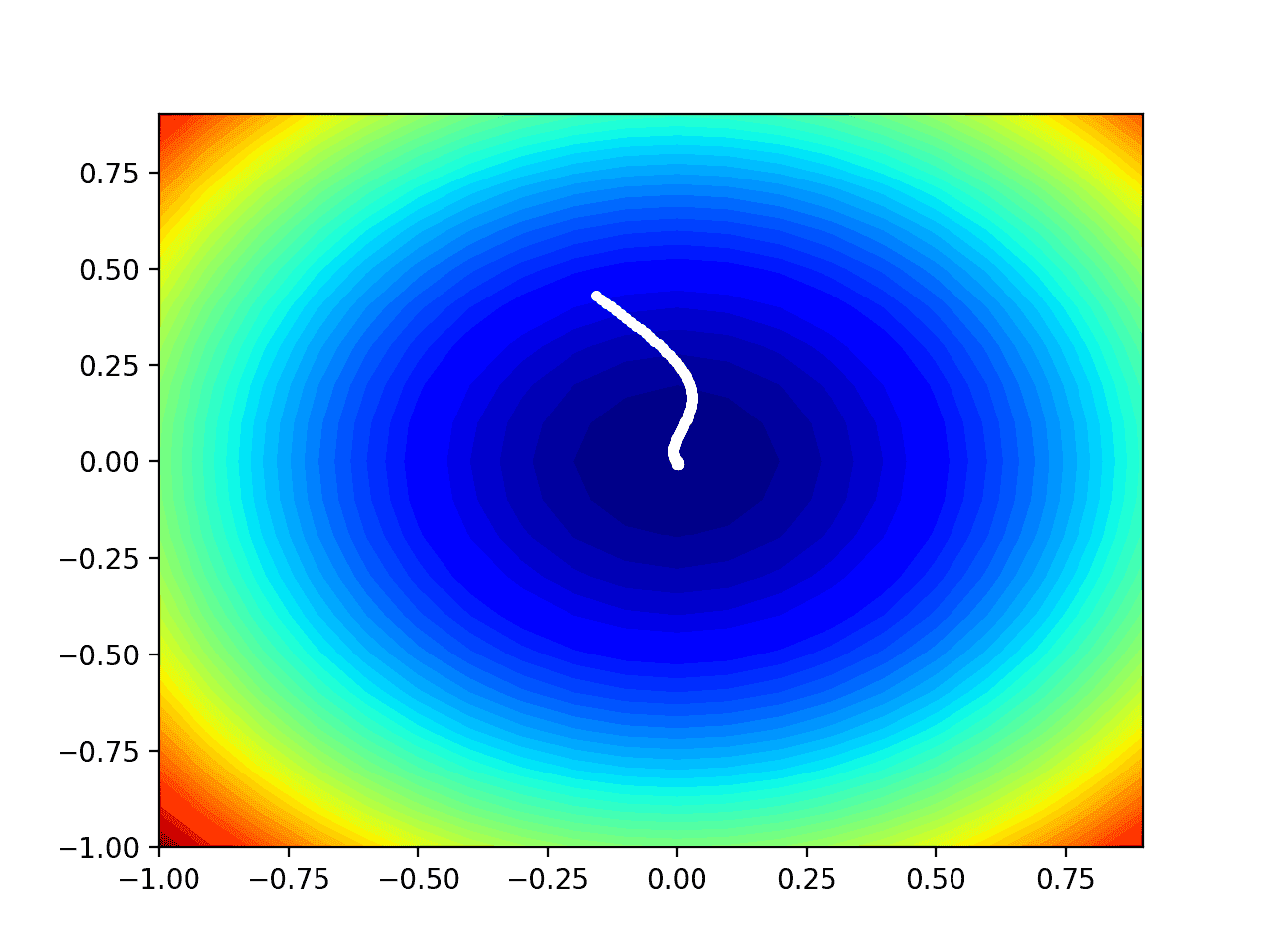

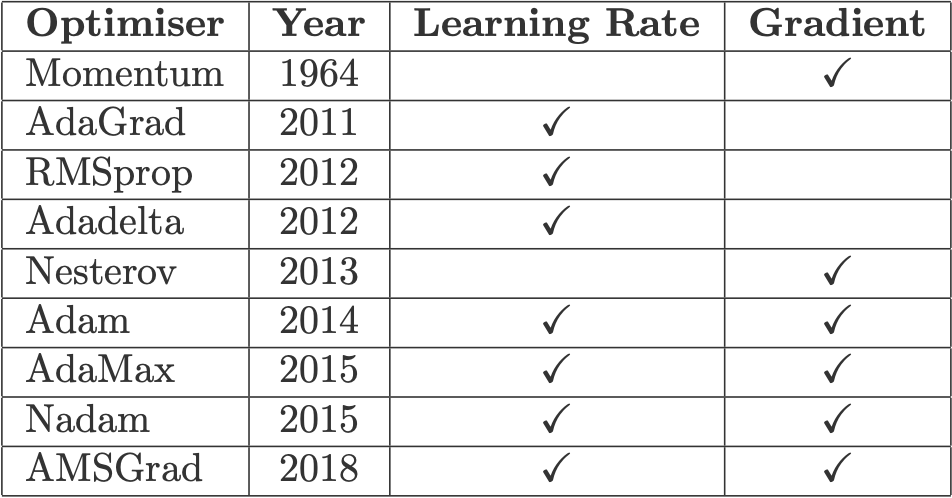

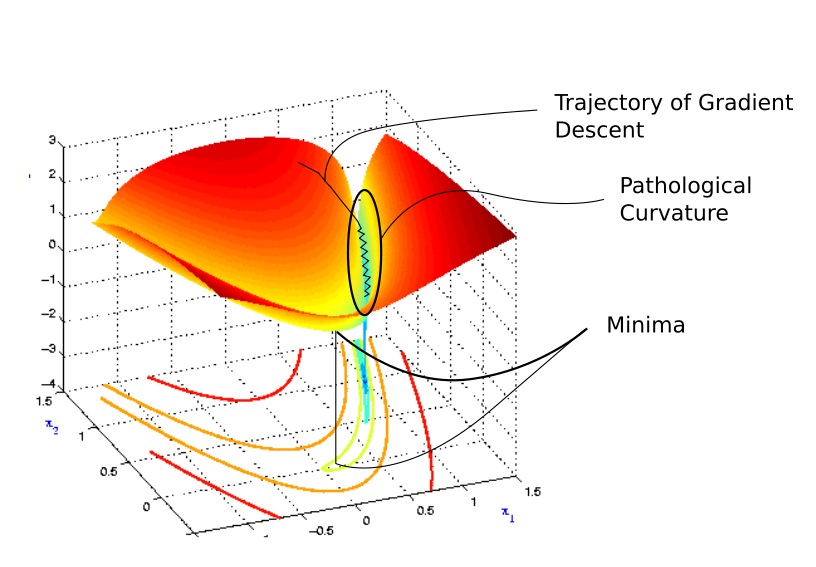

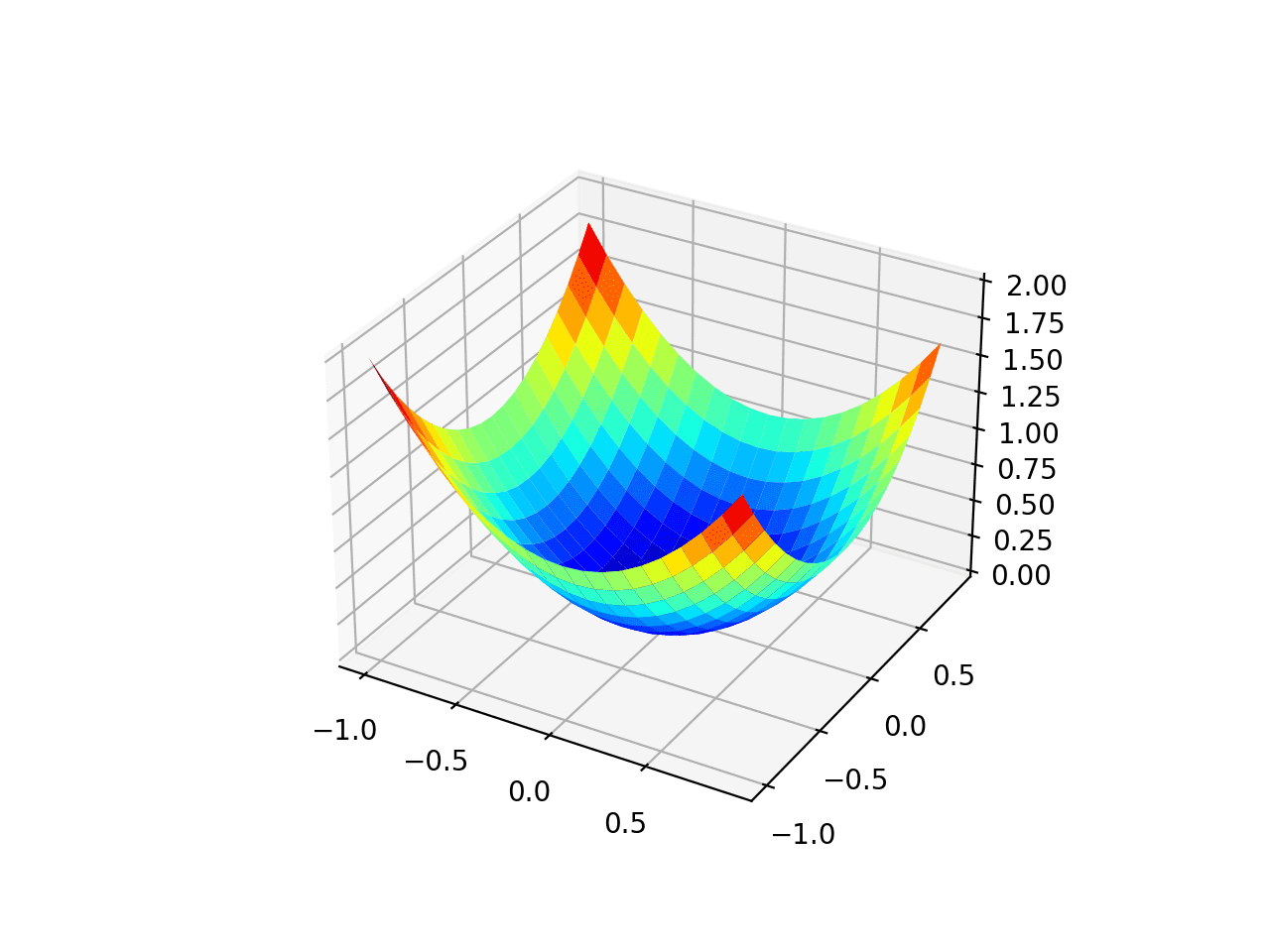

10 Stochastic Gradient Descent Optimisation Algorithms + Cheatsheet | by Raimi Karim | Towards Data Science

![A Sufficient Condition for Convergences of Adam and RMSProp | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/27f197e0401b854d14a41829e09209e38fe920b6/8-Figure3-1.png)

![Variants of RMSProp and Adagrad with Logarithmic Regret Bounds | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/431b37402a5ccf52ee48d2ca2bcaaec54a827b08/7-Figure2-1.png)

![Variants of RMSProp and Adagrad with Logarithmic Regret Bounds | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/431b37402a5ccf52ee48d2ca2bcaaec54a827b08/7-Figure1-1.png)